Digitalization and the development of artificial intelligence (AI) bring up many philosophical and ethical questions about the role of man and robot in the nascent social and economic order. How real is the threat of an AI dictatorship? Why do we need to tackle AI ethics today? Does AI provide breakthrough solutions? We ask these and other questions in our interview with Maxim Fedorov, Vice-President for Artificial Intelligence and Mathematical Modelling at Skoltech.

On 1–3 July, Maxim Fedorov chaired the inaugural Trustworthy AI online conference on AI transparency, robustness and sustainability hosted by Skoltech.

Maxim, do you think humanity already needs to start working out a new philosophical model for existing in a digital world whose development is determined by artificial intelligence (AI) technologies?

The fundamental difference between today’s technologies and those of the past is that they hold up a “mirror” of sorts to society. Looking into this mirror, we need to answer a number of philosophical questions. In times of industrialization and production automation, the human being was a productive force. Today, people are no longer needed in the production of the technologies they use. For example, innovative Japanese automobile assembly plants barely have any people on the floors, with all the work done by robots. The manufacturing process looks something like this: a driverless robot train carrying component parts enters the assembly floor, and a finished car comes out. This is called discrete manufacturing – the assembly of a finite set of elements in a sequence, a task which robots manage quite efficiently. The human being is gradually being ousted from the traditional economic structure, as automated manufacturing facilities generally need only a limited number of human specialists. So why do we need people in manufacturing at all? In the past, we could justify our existence by the need to earn money or consume, or to create jobs for others, but now this is no longer necessary. Digitalization has made technologies a global force, and everyone faces philosophical questions about their personal significance and role in the modern world – questions we should be answering today, and not in ten years when it will be too late.

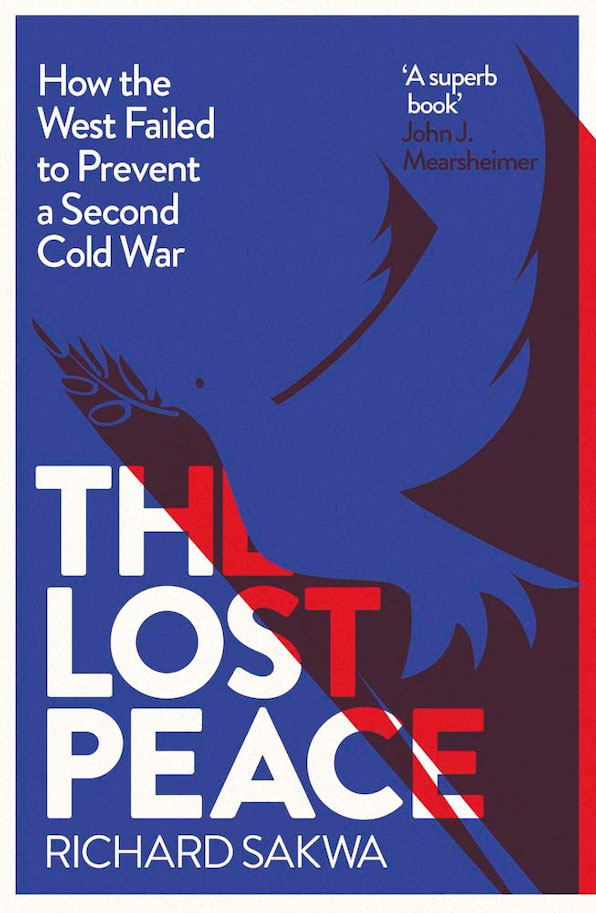

At the last World Economic Forum in Davos, there was a lot of discussion about the threat of the digital dictatorship of AI. How real is that threat in the foreseeable future?

There is no evil inherent in AI. Technologies themselves are ethically neutral. It is people who decide whether to use them for good or evil.

Speaking of an AI dictatorship is misleading. In reality, technologies have no subjectivity, no “I.” Artificial intelligence is basically a structured piece of code and hardware. Digital technologies are just a tool. There is nothing “mystical” about them either.

My view as a specialist in the field is that AI is currently a branch of information and communications technology (ICT). Moreover, AI does not even “live” in an individual computer. For a person from the industry, AI is a whole stack of technologies that are combined to form what is called “weak” AI.

We inflate the bubble of AI’s importance and erroneously impart this technology stack with subjectivity. In large part, this is done by journalists, people without a technical education. They discuss an entity that does not actually exist, giving rise to the popular meme of an AI that is alternately the Terminator or a benevolent super-being. This is all fairy tales. In reality, we have a set of technological solutions for building effective systems that allow decisions to be made quickly based on big data.

Various high-level committees are discussing “strong” AI, which will not appear for another 50 to 100 years (if at all). The problem is that when we talk about threats that do not exist and will not exist in the near future, we are missing some real threats. We need to understand what AI is and develop a clear code of ethical norms and rules to secure value while avoiding harm.

Sensationalizing threats is a trend in modern society. We take a problem that feeds people’s imaginations and start blowing it up. For example, we are currently destroying the economy around the world under the pretext of fighting the coronavirus. What we are forgetting is that the economy has a direct influence on life expectancy, which means that we are robbing many people of years of life. Making decisions based on emotion leads to dangerous excesses.

As the philosopher Yuval Noah Harari has said, millions of people today trust the algorithms of Google, Netflix, Amazon and Alibaba to dictate to them what they should read, watch and buy. People are losing control over their lives, and that is scary.

Yes, there is the danger that human consciousness may be “robotized” and lose its creativity. Many of the things we do today are influenced by algorithms. For example, drivers listen to their sat navs rather than relying on their own judgment, even if the route suggested is not the best one. When we receive a message, we feel compelled to respond. We have become more algorithmic. But it is ultimately the creator of the algorithm, not the algorithm itself, that dictates our rules and desires.

There is still no global document to regulate behaviour in cyberspace. Should humanity perhaps agree on universal rules and norms for cyberspace first before taking on ethical issues in the field of AI?

I would say that the issue of ethical norms is primary. After we have these norms, we can translate them into appropriate behaviour in cyberspace. With the spread of the internet, digital technologies (of which AI is part) are entering every sphere of life, and that has led us to the need to create a global document regulating the ethics of AI.

But AI is a component part of information and communications technologies (ICT). Maybe we should not create a separate track for AI ethics but join it with the international information security (IIS) track? Especially since IIS issues are being actively discussed at the United Nations, where Russia is a key player.

There is some justification for making AI ethics a separate track, because, although information security and AI are overlapping concepts, they are not embedded in one another. However, I agree that we can have a separate track for information technology and then break it down into sub-tracks where AI would stand alongside other technologies. It is a largely ontological problem and, as with most problems of this kind, finding the optimal solution is no trivial matter.

You are a member of the international expert group under UNESCO that is drafting the first global recommendation on the ethics of AI. Are there any discrepancies in how AI ethics are understood internationally?

The group has its share of heated discussions, and members often promote opposing views. For example, one of the topics is the subjectivity and objectivity of AI. During the discussion, a group of states clearly emerged that promotes the idea of subjectivity and is trying to introduce the concept of AI as a “quasi-member of society.” In other words, attempts are being made to imbue robots with rights. This is a dangerous trend that may lead to a sort of technofascism, inhumanity of such a scale that all previous atrocities in the history of our civilization would pale in comparison.

Could it be that, by promoting the concept of robot subjectivity, the parties involved are trying to avoid responsibility?

Absolutely. A number of issues arise here. First, there is an obvious asymmetry of responsibility. “Let us give the computer rights, and if its errors lead to damage, we will punish it by pulling the plug or formatting the hard drive.” In other words, the responsibility is placed on the machine and not its creator. The creator gets the profit, and any damage caused is someone else’s problem. Second, as soon as we give AI rights, the issues we are facing today with regard to minorities will seem trivial. It will lead to the thought that we should not hurt AI but rather educate it (I am not joking: such statements are already being made at high-level conferences). We will see a sort of juvenile justice for AI. Only it will be far more terrifying. Robots will defend robot rights. For example, a drone may come and burn your apartment down to protect another drone. We will have a techno-racist regime, but one that is controlled by a group of people. This way, humanity will drive itself into a losing position without having the smallest idea of how to escape it.

Thankfully, we have managed to remove any inserts relating to “quasi-members of society” from the group’s agenda.

We chose the right time to create the Committee for Artificial Intelligence under the Commission of the Russian Federation for UNESCO, as it helped to define the main focus areas for our working group. We are happy that not all countries support the notion of the subjectivity of AI – in fact, most oppose it.

What other controversial issues have arisen in the working group’s discussions?

We have discussed the blurred border between AI and people. I think this border should be defined very clearly. Then we came to the topic of human-AI relationships, a term which implies the whole range of relationships possible between people. We suggested that “relationships” be changed to “interactions,” which met opposition from some of our foreign colleagues, but in the end, we managed to sort it out.

Seeing how advanced sex dolls have become, the next step for some countries would be to legalize marriage with them, and then it would not be long before people start asking for church weddings. If we do not prohibit all of this at an early stage, these ideas may spread uncontrollably. This approach is backed by big money, the interests of corporations and a different system of values and culture. The proponents of such ideas include a number of Asian countries with a tradition of humanizing inanimate objects. Japan, for example, has a tradition of worshipping mountain, tree and home spirits. On the one hand, this instills respect for the environment, and I agree that, being a part of the planet, part of nature, humans need to live in harmony with it. But still, a person is a person, and a tree is a tree, and they have different rights.

Is the Russian approach to AI ethics special in any way?

We were the only country to state clearly that decisions on AI ethics should be based on a scientific approach. Unfortunately, most representatives of other countries rely not on research, but on their own (often subjective) opinion, so discussions in the working group often devolve to the lay level, despite the fact that the members are highly qualified individuals.

I think these issues need to be thoroughly researched. Decisions on this level should be based on strict logic, models and experiments. We have tremendous computing power, an abundance of software for scenario modelling, and we can model millions of scenarios at a low cost. Only after that should we draw conclusions and make decisions.

How realistic is the fight against the subjectification of AI if big money is at stake? Does Russia have any allies?

Everyone is responsible for their own part. Our task right now is to engage in discussions systematically. Russia has allies with matching views on different aspects of the problem. And common sense still prevails. The egocentric approach currently being promoted in a number of countries, this kind of self-absorption, actually plays into our hands here. Most states are afraid that humans will cease to be the centre of the universe, ceding our crown to a robot or a computer. This has allowed the human-centred approach to prevail so far.

If the expert group succeeds at drafting recommendations, should we expect some sort of international regulation on AI in the near future?

If we are talking about technical standards, they are already being actively developed at the International Organization for Standardization (ISO), where we have been involved with Technical Committee 164 “Artificial Intelligence” (TC 164) in the development of a number of standards on various aspects of AI. So, in terms of technical regulation, we have the ISO and a whole range of documents. We should also mention the Institute of Electrical and Electronics Engineers (IEEE) and its report on Ethically Aligned Design. I believe this document is the first full-fledged technical guide on the ethics of autonomous and intelligent systems, which includes AI. The corresponding technical standards are currently being developed.

As for the United Nations, I should note the Beijing Consensus on Artificial Intelligence and Education that was adopted by UNESCO last year. I believe that work on developing the relevant standards will start next year.

So the recommendations will become the basis for regulatory standards?

Exactly. This is the correct way to do it. I should also say that it is important to get involved at an early stage. This way, for instance, we can refer to the Beijing agreements in the future. It is important to make sure that AI subjectivity does not appear in the UNESCO document, so that it does not become a reference point for this approach.

Let us move from ethics to technological achievements. What recent developments in the field can be called breakthroughs?

We haven’t seen any qualitative breakthroughs in the field yet. Image recognition, orientation, navigation, transport, better sensors (which are essentially the sensory organs for robots) – these are the achievements that we have so far. In order to make a qualitative leap, we need a different approach.

Take the “chemical universe,” for example. We have researched approximately 100 million chemical compounds. Perhaps tens of thousands of these have been studied in great depth. And the total number of possible compounds is 10⁶⁰, which is more than the number of atoms in the Universe. This “chemical universe” could hold cures for every disease known to humankind or some radically new, super-strong or super-light materials. There is a multitude of organisms on our planet (such as the sea urchin) with substances in their bodies that could, in theory, cure many human diseases or boost immunity. But we do not have the technology to synthesize many of them. And, of course, we cannot harvest all the sea urchins in the sea, dry them and make an extract for our pills. But big data and modelling can bring about a breakthrough in this field. Artificial intelligence can be our navigator in this “chemical universe.” Any reasonable breakthrough in this area will multiply our income exponentially. Imagine an AIDS or cancer medicine without any side effects, or new materials for the energy industry, new types of solar panels, etc. These are the kind of things that can change our world.

How is Russia positioned on the AI technology market? Is there any chance of competing with the United States or China?

We see people from Russia working in the developer teams of most big Asian, American and European companies. A famous example is Sergey Brin, co-founder and developer of Google. Russia continues to be a “donor” of human resources in this respect. It is both reassuring and disappointing because we want our talented guys to develop technology at home. Given the right circumstances, Yandex could have dominated Google.

As regards domestic achievements, the situation is somewhat controversial. Moscow today is comparable to San Francisco in terms of the number, quality and density of AI development projects. This is why many specialists choose to stay in Moscow. You can find a rewarding job, interesting challenges and a well-developed expert community.

In the regions, however, there is a concerning lack of funds, education and infrastructure for technological and scientific development. All three of our largest supercomputers are in Moscow. Our leaders in this area are the Russian Academy of Sciences, Moscow State University and Moscow Institute of Physics and Technology – organizations with a long history in the sciences, rich traditions, a sizeable staff and ample funding. There are also some pioneers who have got off the ground quickly, such as Skoltech, and surpassed their global competitors in many respects. We recently compared Skoltech with a leading AI research centre in the United Kingdom and discovered that our institution actually leads in terms of publications and grants. This means that we can and should do world-class science in Russia, but we need to overcome regional development disparities.

Russia has the opportunity to take its rightful place in the world of high technology, but our strategy should be to “overtake without catching up.” If you look at our history, you will see that whenever we have tried to catch up with the West or the East, we have lost. Our imitations turned out wrong, were laughable and led to all sorts of mishaps. On the other hand, whenever we have taken a step back and synthesized different approaches, Asian or Western, without blindly copying them, we have achieved tremendous success.

We need to make a sober assessment of what is happening in the East and in the West and what corresponds to our needs. Russia has many unique challenges of its own: managing its territory, developing the resource industries and continuous production. If we are able to solve these tasks, then later we can scale up our technological solutions to the rest of the world, and Russian technology will be bought at a good price. We need to go down our own track, not one that is laid down according to someone else’s standards, and go on our way while being aware of what is going on around us. Not pushing back, not isolating, but synthesizing.

Interview by Anastasia Tolstukhina, Programme Coordinator and Website Editor at the Russian International Affairs Council