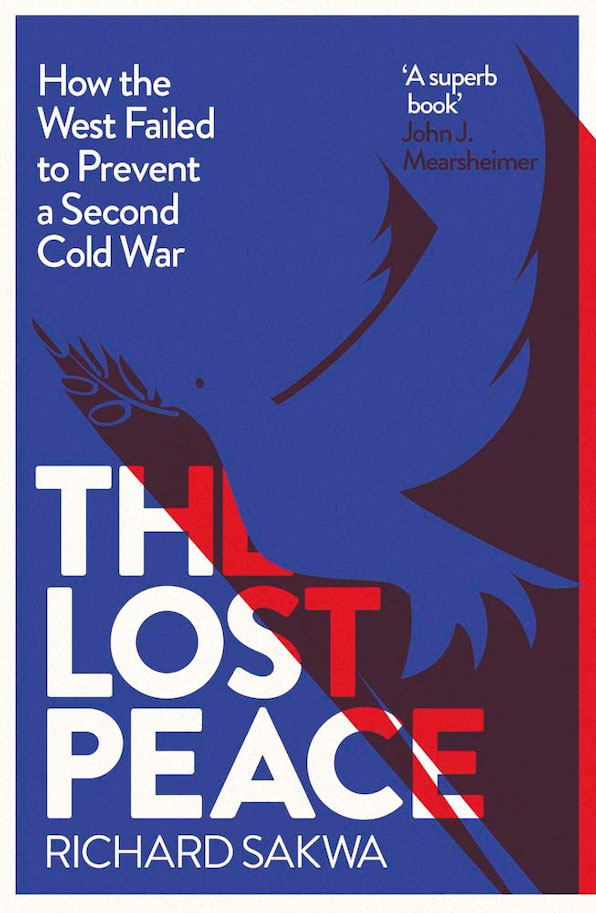

The outgoing year has demonstrated, on the one hand, continued growth in the power and capabilities of Artificial Intelligence (AI) built on so-called “foundation models”, which are based on deep neural networks such as “transformers”. On the other hand, we have witnessed the entrenchment of certain trends in AI development aimed at overcoming well-known limitations of AI systems. This is happening against the backdrop of tectonic shifts in the political arena, potentially leading to the breakdown of unipolarity not only in the global economy but also in technological infrastructure.

As we mentioned in last year’s review, “foundation models” continue to impress the unsophisticated user with their growing “human-level” capabilities. A month ago, almost simultaneously, Facebook [1] and Google announced “transformer” models capable of generating semi-realistic videos from text prompts. Slightly earlier, an image produced with the Midjourney neural network won an American artist first prize in a fine art competition held in the United States. This summer, matters reached the point where a Google specialist working in this field claimed to have discovered signs of consciousness in an AI system and went public with his concerns, which led to his dismissal for disclosing confidential information.

However, experts are well aware that almost all of the above-mentioned achievements would be impossible without a human specialist doing “prompt engineering” and “cherry-picking”. On the one hand, the human operator of an AI system manually selects words, phrases and their sequences in order to obtain the expected result; on the other hand, the same operator subjectively evaluates the quality and adequacy of the results, in the end selecting only one of hundreds of generated outcomes. This is roughly how one searches the Internet, picking and combining words in a query until the desired information appears in the results. In a similar manner, fortune-tellers read coffee grounds, stirring them until the resulting pattern can be interpreted in a way that convinces the client.
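The division of labor described above can be sketched as a generate-and-select loop. This is a toy under loud assumptions: `toy_model` is a hypothetical stand-in for a real generative model (it only appends a random number to the prompt), and `judge` stands in for the operator's subjective taste. The only point it makes is that both the search over wordings and the final selection happen outside the model.

```python
import random
from typing import Callable, List, Tuple


def toy_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a generative model: returns a random variant."""
    return f"{prompt} #{rng.randint(0, 999)}"


def cherry_pick(
    prompt_variants: List[str],
    judge: Callable[[str], float],
    samples_per_prompt: int = 100,
    seed: int = 0,
) -> Tuple[str, float, int]:
    """Try every hand-written prompt, sample many outputs, keep the one the judge likes best."""
    rng = random.Random(seed)
    best, best_score, examined = "", float("-inf"), 0
    for prompt in prompt_variants:            # "prompt engineering": human-chosen wordings
        for _ in range(samples_per_prompt):   # many samples per wording
            output = toy_model(prompt, rng)
            score = judge(output)             # "cherry-picking": subjective evaluation
            examined += 1
            if score > best_score:
                best, best_score = output, score
    return best, best_score, examined


if __name__ == "__main__":
    # Hypothetical taste: this operator prefers outputs whose number is close to 500.
    prefers_500 = lambda text: -abs(int(text.rsplit("#", 1)[1]) - 500)
    prompts = ["a cat", "a photo of a cat", "a cat, oil painting"]
    best, score, n = cherry_pick(prompts, prefers_500)
    print(f"kept 1 of {n} candidates: {best!r} (score {score})")
```

Whatever "quality" the kept output has was supplied by the prompts and the judge; the stand-in model contributes only variety.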

In the meantime, deep neural networks based on the “transformer” architecture with its “attention” mechanism are finding more and more applications beyond text processing, for which they were originally designed. A wide range of such applications was presented earlier this year at the annual Russian OpenTalks.AI conference. Virtually all of them either involve a severely restricted range of tasks (e.g., face or traffic sign recognition) with large amounts of manually prepared training data, or target uses where errors do not appear to be critical, such as generating images and video sequences or building conversational chatbots for the entertainment and leisure industries.
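For readers unfamiliar with the “attention” mechanism, its core is the scaled dot-product attention of the original Transformer, softmax(QKᵀ/√d)·V: each query is compared with every key, the scores are normalized with a softmax, and the output is the correspondingly weighted average of the values. Below is a dependency-free sketch of a single head; real models add learned projections, multiple heads and masking, all omitted here.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention for one head: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    output = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return output


if __name__ == "__main__":
    # Two identical keys receive equal weight, so the output is the mean of the values.
    print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 4.0]]))
    # -> [[1.0, 2.0]]
```

Nothing in this computation is specific to text: queries, keys and values can come from image patches, audio frames or video, which is why the architecture transfers to other domains.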

Problems of “explainability and interpretability” of AI based on deep neural network models, raised in one of our previous reviews, have not been adequately addressed so far, meaning that reliable automation of critical infrastructure with the help of modern AI is not yet feasible. In addition, full-scale implementation of “foundation models” requires computational resources beyond the reach of even large corporations, with the exception of major IT companies and banks.
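To give a sense of scale, a widely used rule of thumb estimates the training compute of a dense transformer at roughly 6 FLOPs per parameter per training token. The inputs below are assumptions for illustration only: model and data size roughly at the published scale of GPT-3 (175 billion parameters, about 300 billion tokens) and a hypothetical sustained throughput of 100 TFLOP/s per accelerator.

```python
SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb estimate: about 6 FLOPs per parameter per training token."""
    return 6.0 * params * tokens


def accelerator_days(flops: float, sustained_flops_per_second: float = 1e14) -> float:
    """Days of compute on ONE accelerator at an assumed (hypothetical) sustained throughput."""
    return flops / sustained_flops_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    total = training_flops(175e9, 300e9)   # about 3.15e23 FLOPs
    days = accelerator_days(total)         # about 36,000 accelerator-days
    print(f"{total:.2e} FLOPs, about {days:,.0f} accelerator-days,")
    print(f"i.e. about {days / 1000:.0f} days on 1,000 accelerators")
```

This counts a single successful run; real utilization losses, restarts and hyperparameter searches would add to it, which is consistent with only a handful of organizations being able to train such models from scratch.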

Alongside the progress outlined above, more and more “opinion shapers” in today’s scientific community are focusing both on the “inherent problems” of the classical approach based on deep neural networks and on possible ways of overcoming them. For example, Yann LeCun, a “thought leader” at one of the world’s leading IT companies, describes the current situation in AI in his recent seminal paper as “a cul-de-sac” and analyzes ways out of this “blind alley” in great detail. He sees “structural thinking”, based on the hierarchical neuro-symbolic representations discussed in our previous review, as one of the avenues of development. Another important direction is the construction of energy-efficient models, roughly in the same vein as the Non-Axiomatic Reasoning System (NARS), designed to operate under limited resources, which Pei Wang, one of the authors of the term Artificial General Intelligence (AGI), proposed over 20 years ago.

We should recall that the generalized canonical definition of general (strong) artificial intelligence, based on the individual definitions of Ben Goertzel, Pei Wang, Shane Legg and Marcus Hutter, is “the ability to achieve complex goals in complex environments using limited resources”.

The need for “structural reasoning” also follows from recent work by a team including Jürgen Schmidhuber, another “authority” on neural networks and the “inventor of LSTM”, in which the effectiveness of machine learning was demonstrated experimentally by identifying relatively high-level behavioral primitives (invariants) from relatively low-level spatiotemporal data obtained during unsupervised learning. Similar results were obtained at NSU and presented at the AGI-2021 conference last year. That work showed, both experimentally and quantitatively, that learning the same reinforcement learning task under identical conditions, parameterized either at the object level or at the level of individual pixels, succeeds in the same number of environment cycles, but the computational cost in the former case is drastically lower than in the latter. Moreover, the time required in the pixel-level case is unacceptably high for physical implementation. This indicates the practical need, for the sake of efficiency, to build complex, at least two-level, learning schemes, in which the system learns primitives of the environment at the first level and more complex behavioral programs built from these primitives at the second level. Here we arrive at the fundamental role of cost-effectiveness, whose critical importance Pei Wang has long stressed and which Yann LeCun is now highlighting.
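A toy illustration of such a two-level scheme follows; it is not a reproduction of the NSU or Schmidhuber experiments. Level 1 turns a raw “pixel” grid into object coordinates, and level 2 runs ordinary tabular Q-learning over those coordinates. The 5×5 world, the cost of -1 per move and all hyperparameters are arbitrary assumptions.

```python
import random
from typing import Dict, List, Tuple

SIZE = 5                                        # toy 5x5 world (assumption)
START, GOAL = (0, 0), (SIZE - 1, SIZE - 1)
ACTIONS = [(0, 1), (1, 0), (0, -1), (-1, 0)]    # down, right, up, left
Pos = Tuple[int, int]
State = Tuple[Pos, Pos]


def render(agent: Pos, goal: Pos) -> List[List[int]]:
    """Level 0: a raw 'pixel' frame (1 = agent, 2 = goal, 0 = empty)."""
    frame = [[0] * SIZE for _ in range(SIZE)]
    frame[goal[1]][goal[0]] = 2
    frame[agent[1]][agent[0]] = 1
    return frame


def extract_objects(frame: List[List[int]]) -> State:
    """Level 1: turn pixels into object-level primitives (agent and goal coordinates)."""
    found = {}
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value:
                found[value] = (x, y)
    return found[1], found[2]


def move(agent: Pos, action: int) -> Pos:
    dx, dy = ACTIONS[action]
    return (min(max(agent[0] + dx, 0), SIZE - 1), min(max(agent[1] + dy, 0), SIZE - 1))


def greedy(q: Dict, state: State) -> int:
    values = [q.get((state, a), 0.0) for a in range(len(ACTIONS))]
    return values.index(max(values))


def train(episodes=3000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0) -> Dict:
    """Level 2: tabular Q-learning over object-level states (cost -1 per move)."""
    rng = random.Random(seed)
    q: Dict = {}
    for _ in range(episodes):
        agent = START
        for _ in range(100):
            state = extract_objects(render(agent, GOAL))
            explore = rng.random() < epsilon
            action = rng.randrange(len(ACTIONS)) if explore else greedy(q, state)
            agent = move(agent, action)
            if agent == GOAL:
                target = -1.0                   # terminal: nothing to bootstrap from
            else:
                nxt = extract_objects(render(agent, GOAL))
                target = -1.0 + gamma * q.get((nxt, greedy(q, nxt)), 0.0)
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (target - old)
            if agent == GOAL:
                break
    return q


def greedy_steps(q: Dict, limit: int = 50) -> int:
    """Follow the learned policy from START; return moves needed (-1 if it never arrives)."""
    agent = START
    for step in range(1, limit + 1):
        agent = move(agent, greedy(q, extract_objects(render(agent, GOAL))))
        if agent == GOAL:
            return step
    return -1


if __name__ == "__main__":
    q = train()
    print("moves to goal:", greedy_steps(q))    # the shortest path on a 5x5 grid is 8 moves
```

Keying the same table by the full 25-cell frame would visit exactly the same states in the same number of episodes, since the two encodings are one-to-one here; what grows is the cost of handling each state (25 values instead of 4, and H×W in general). That loosely mirrors the observation above: equal cycle counts, very different computational cost.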

An even more fundamental basis for strong AI, grounded in theoretical physics, is provided by a team of authors led by Karl Friston in this year’s book, which explains the intelligent behavior of living beings, including humans, by the fundamental principle of minimizing so-called “free energy”. Without going into philosophy, physics or mathematics, this principle, very roughly, predetermines the tendency of an “intelligent or reasonable system” to reduce the free energy it incurs, that is, to mitigate the uncertainty or unpredictability it experiences. Interestingly, despite the apparent semantic similarity of such parameters as probability and predictability, we demonstrated in our recent work on unsupervised learning for segmenting natural language texts that models based on probability metrics and on uncertainty metrics differ significantly in accuracy, with uncertainty-based models being much more efficient in terms of segmentation quality. At the same time, the vast majority of current machine learning models are based on maximizing prediction probability, so these works pave the way for new fundamental and applied research.

At the annual AGI-2022 conference on strong AI, held in August, Ben Goertzel described the current moment in the field’s development quite accurately in his opening address. This moment can be seen as reaching the frontiers laid down 25 years ago, when the above-mentioned principles, including “structural reasoning”, “neuro-symbolic integration”, “cost-effectiveness” and “interpretability”, along with several others, under slightly different names and definitions, formed the basis of Webmind, the first strong AI project (1997-2001). It was the starting point in the professional careers of many prominent contemporary researchers and developers in the field. Today, however, the return to these principles is taking place at a totally different level of computing resource availability and power, of development of methods based on “deep” neural networks, and of understanding of their fundamental strengths and weaknesses.

The trend of creating so-called “cognitive architectures”, which combine modules performing various cognitive functions in order to solve a more or less wide range of tasks, remains relevant. The most famous example of such an architecture is AlphaGo from DeepMind. In Russia, the most advanced robotics solutions in this field are being created at the AIRI research institute. This year, a joint team of the Neurosciences Laboratory of Sberbank and NSU presented an original neuro-symbolic cognitive architecture for decision-making systems, built on the fundamental principles of brain activity and implemented with formal mathematical methods, at two international conferences: Biologically Inspired Cognitive Architectures (BICA-2022) and General/Strong AI (AGI-2022). The latter conference also featured an innovative cognitive architecture developed at MIPT, which reproduces the functional structure and architecture of the human brain with the aim of creating an operating system for robots based on an artificial psyche.

A separate trend worth noting, related both to the problem of strong AI and to the problem of cost-effectiveness, is neuromorphic computing architectures, specifically spiking (pulsed) neural networks. The point is that all modern solutions based on classical computing architectures, including artificial neural networks implemented on central processors, multi-core multiprocessor systems and even graphics cards, have both extremely high energy consumption and an extremely low degree of parallelism compared with the central nervous systems of animals. The most advanced computing systems, which are not yet comparable even with mammals in terms of intellectual capabilities, already exceed the energy consumption of the human brain by many orders of magnitude. Spiking neural networks implemented in neuromorphic chips could hypothetically help bridge this gap.

One crucial factor is that the complex cognitive tasks performed by animals and humans usually require sorting through a large number of options to select the optimal one. Within traditional architectures, this calls for some form of enumeration: an acceptable option may turn out to be either the first or the last in the list, which is not known in advance, so all variants have to be run through, and in the case of classical neural networks, the activations of all neurons in all layers of a deep network have to be computed. With massively parallel processing of cognitive tasks, both in the cerebral cortex and in spiking neural networks, all variants are explored simultaneously (asynchronously) in all layers, and at some point the “winning” variant starts to suppress the “alternatives”, so that “enumerating all possibilities” is not required in most cases. Furthermore, representing information as single pulses instead of transmitting multibit machine words is another factor potentially reducing power consumption by several orders of magnitude. Those interested in this subject may read Mikhail Kiselev’s report from last month.
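The “winner suppresses the alternatives” dynamics can be illustrated with a toy network of leaky integrate-and-fire neurons coupled by lateral inhibition. This is not the model from the report cited above, and the threshold, leak and inhibition strength are arbitrary assumptions. Each neuron integrates a constant input representing the evidence for one option; whichever reaches threshold first inhibits the others, so no option is evaluated in sequence, and the outputs are single spikes rather than multibit numbers.

```python
from typing import List


def winner_take_all(
    inputs: List[float],
    steps: int = 100,
    threshold: float = 1.0,
    leak: float = 0.1,
    inhibition: float = 1.0,
) -> List[int]:
    """Simulate leaky integrate-and-fire neurons with lateral inhibition; return spike counts."""
    potentials = [0.0] * len(inputs)
    spikes = [0] * len(inputs)
    for _ in range(steps):
        fired = []
        for i, current in enumerate(inputs):
            # All neurons integrate in parallel (time step 1): v <- v + I - leak * v
            potentials[i] += current - leak * potentials[i]
            if potentials[i] >= threshold:
                fired.append(i)
        for i in fired:
            spikes[i] += 1          # a spike is a single binary event
            potentials[i] = 0.0     # reset after firing
        for j in range(len(potentials)):
            if j not in fired:
                # Each spike in this step pushes the silent neurons away from threshold.
                potentials[j] -= inhibition * len(fired)
    return spikes


if __name__ == "__main__":
    # Option 1 has the strongest evidence; it fires every 3 steps and silences the rest.
    print(winner_take_all([0.3, 0.5, 0.2]))  # -> [0, 33, 0]
```

With weaker inhibition the runner-up may occasionally fire as well; the strength of lateral inhibition is what turns parallel competition into a clean choice.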

This report, as well as other works in the field of strong and general AI (AGI) mentioned above, have been discussed weekly for the past few years at the online seminars of AGIRussia, the Russian-speaking community of strong AI developers, and in its Telegram groups; the discussion materials are available on the community’s YouTube channel.

Unfortunately, this year’s political situation has not only hampered international scientific communication and destroyed a large number of industrial and economic ties in AI research and development, but has also aggravated Russia’s already disastrous strategic technological lag in this field behind the United States and China. The Artificial Intelligence Almanac 2021 ranks Russia 17th to 22nd in the world in terms of scientific publications and patents, tens to hundreds of times behind the leaders, China and the United States. Moreover, the resources allocated in our country to developing the relevant areas and implementing the projects mentioned above are negligible compared with the allocations of Western IT giants and the Chinese government. Under the pressure of sanctions and the destruction of global economic ties, Russia’s technological backwardness may become irreversible unless extraordinary measures are taken.

In humanitarian terms, the problem of AI security, as regards the unacceptability of Lethal Autonomous Weapons Systems (LAWS), stated in our previous review, has taken on a whole new dimension. The Armenian-Azerbaijani conflict of 2020 turned out to be a “war of drones” (unmanned aerial vehicles, or UAVs), with victory going to the side that had the advantage in drones. Elon Musk has called the conflict in Ukraine a “drone war”, and one of a very different scale, as news reports show. An Internet search for “drone war, Ukraine” makes it possible to grasp the full drama of the situation, both in terms of the areas of application and in terms of supplying troops with these technical capabilities. Here, the presence or absence of even imperfect modern UAVs with fully manual control or simple GPS guidance proves decisive for the outcome of military-technical operations of various classes. The same can be said about counter-UAV systems, so we can expect the technological arms race in the foreseeable future to unfold at the cutting edge of unmanned vehicle development: aerial, surface, underwater and ground “drones”, including LAWS, as well as counter-UAV systems.

In the latter case, the ability to operate autonomously under jamming or heavy clutter in a changing operational environment may be among the decisive “competitive advantages” in this race. At the same time, the cost-effectiveness of UAVs will be crucial to their practical applicability because of the direct relationship between power consumption, range, take-off weight and payload. Given that even existing international arms control agreements are being denounced, it is hard to expect progress in LAWS control, including loitering munitions, kamikaze drones and their forthcoming autonomous versions with independent target selection and strike decision-making.

Further complicating the situation is the explosive growth in demand for UAVs and the dramatic increase in the profits of the companies producing them. As a result, banning the development and use of LAWS seems almost impossible, at least until the current conflict is over and the entire global international security system is radically revised. In the meantime, states with greater UAV and LAWS capabilities will have significant military and technical advantages, and those that produce them will have an economic edge.

In conclusion, the further development of AI, which is undergoing rapid growth, requires new solutions and new directions on the one hand. On the other hand, the aggravation of political, economic and military confrontation between the leading players on the world stage makes them even more dependent on technological superiority, without which they may quickly fall behind. Finally, the prospective humanization of AI is possible only after a radical stabilization of the international situation.

1. Meta, the company that owns Facebook, has been recognized as extremist and banned in Russia.