Artificial Intelligence: Time of the Weak

Research fellow at TU Dortmund University, specialist in autonomous decision-making systems, RIAC Expert

Introduction

The term “artificial intelligence” (AI) normally denotes a computer program, i.e. an algorithm, capable of solving problems that an adult human brain can solve. The International Dictionary of Artificial Intelligence [18] defines AI as the field of knowledge concerned with developing technologies that enable computing systems to operate in a way that resembles intelligent behaviour, including human behaviour. It should be noted that this definition is phenomenological: it leaves the notions of intellect and intelligent behaviour to be detailed by philosophy. Given how little is known about the brain and the cognitive apparatus of biological systems, the notion of AI defies more precise mathematical formalization.

At present, AI research is normally understood as the use of algorithms to solve problems that require cognitive effort [2, 10]. Such problems used to include (and partly still include) games such as chess and Go, handwriting recognition, machine translation and creative work. In the eyes of the general public, each of these problems was originally perceived as the last remaining obstacle to the creation of a “true” AI, one that could replace humans in all fields of expertise. In reality, teaching a computer to play chess turned out to be much easier than teaching it to play football, and even knowing how to teach a computer to play football would be difficult to apply to machine translation. This is why, after an initial peak of enthusiasm, the scientific community divided the notion of AI into strong and weak subcategories. Weak AI denotes an algorithm capable of solving highly specialized problems (such as playing chess), whereas strong AI can solve a broader range of problems; ideally, it should be capable of at least everything an adult human can do (including drawing logical conclusions and planning its future actions). In the literature, strong AI is used interchangeably with artificial general intelligence (AGI) [28].

It is worth noting that the strong–weak classification of AI algorithms is not set in stone: as of early 2016, playing Go was believed to require strong AI. After the AlphaGo algorithm’s impressive win over Lee Sedol, one of the world’s strongest professional players, in March 2016 [26], this problem was relegated to the domain of weak AI.

The following main trends in AI research can be identified as of the late 2010s.

Machine Learning and Pattern Recognition

Searching data for consistent patterns, such as classifying objects in photographs as backgrounds, humans, vehicles, buildings or plants.

Planning and Inference

Proving logical statements and planning actions to achieve a certain goal, based on knowledge of the logical laws that allow this goal to be achieved. One example would be fusing sensor data to assess the traffic situation in order to control a vehicle effectively.

Expert Systems

Systematizing data together with logical connections, knowledge mapping and answering semantic questions such as “What is the share of energy prices in the production costs for the Irkut MC-21 aircraft?”
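To make the planning trend described above more concrete, here is a minimal sketch of classical state-space planning under stated assumptions: the domain is a hypothetical toy example, states are sets of facts, and each action (the names `pick_up_key`, `unlock_door` and `walk_through` are invented for illustration) has preconditions plus facts it adds and deletes. Breadth-first search then returns the shortest action sequence that reaches the goal. Practical planners rely on far more sophisticated search and heuristics.

```python
from collections import deque

# Hypothetical toy domain: action -> (preconditions, facts added, facts deleted).
ACTIONS = {
    "pick_up_key": ({"at_door", "key_on_floor"}, {"has_key"}, {"key_on_floor"}),
    "unlock_door": ({"at_door", "has_key"}, {"door_open"}, set()),
    "walk_through": ({"at_door", "door_open"}, {"inside"}, {"at_door"}),
}

def plan(initial, goal, actions):
    """Breadth-first search over sets of facts; returns the shortest plan or None."""
    start = frozenset(initial)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return steps
        for name, (pre, add, delete) in actions.items():
            if pre <= state:  # action is applicable
                nxt = frozenset((state - delete) | add)
                if nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None  # goal unreachable

print(plan({"at_door", "key_on_floor"}, {"inside"}, ACTIONS))
# prints ['pick_up_key', 'unlock_door', 'walk_through']
```

Breadth-first search is chosen here only because it guarantees the shortest plan in a tiny domain; its cost grows quickly with the number of facts and actions.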

Current Status and Prospects

The objective of creating a strong AI has yet to be achieved. In fact, the scientific community cooled perceptibly on AI after the so-called AI winter of the mid-1980s, which was brought about by initially inflated expectations and the insufficient performance of software, and which left potential AI users disillusioned [29]. Considerable progress has nevertheless been achieved in more specialized fields since the mid-2000s. This is primarily due to the continuous evolution of computing hardware: whereas in 2001 Intel’s flagship Pentium III processor delivered 1.4 billion arithmetic operations per second, its descendant a decade later, the Intel Core i5, was capable of 120 billion operations per second, i.e. roughly 85 times more [7, 9]. This growth was spurred to a significant extent by the video gaming and computer graphics industry: the race for ever more realistic imagery turned GPUs from peripheral hardware into powerful computing systems capable of processing not just graphical data but also arbitrary parallel computing tasks (albeit of relatively low complexity). In 2011, the peak performance of the Radeon HD 6970 graphics card stood at 675 billion double-precision floating-point operations per second [11].

The other important driver of the industry was the ability to digitize and manually classify texts, images and audio files, and to create comprehensive digital knowledge bases. The availability of massive volumes of properly classified data allowed large samples to be used to train machine-learning algorithms. Classification accuracy increased [22], turning machine translation from a crude tool into a broadly applicable solution. For example, Google Translate draws on a vast base of user-supplied parallel texts, i.e. identical texts in different languages, which makes it possible to train the system and improve translation quality on the go [8].
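As an illustration of how parallel texts can teach a system word correspondences, the sketch below implements IBM Model 1, a classic statistical word-alignment model estimated with expectation-maximization (EM). This technique is an assumption chosen for clarity, not a description of how Google Translate works internally, and the three-sentence German–English corpus is hypothetical.

```python
from collections import defaultdict

# Hypothetical parallel corpus: (foreign sentence, English sentence) pairs.
corpus = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]

def ibm_model1(pairs, iterations=10):
    """Estimate word-translation probabilities t(f | e) with EM (IBM Model 1, no NULL word)."""
    foreign_vocab = {f for fs, _ in pairs for f in fs}
    t = defaultdict(lambda: 1.0 / len(foreign_vocab))  # uniform start
    for _ in range(iterations):
        count = defaultdict(float)  # expected co-occurrence counts c(f, e)
        total = defaultdict(float)  # expected counts c(e)
        for fs, es in pairs:
            for f in fs:
                norm = sum(t[(f, e)] for e in es)
                for e in es:
                    delta = t[(f, e)] / norm  # share of f explained by e
                    count[(f, e)] += delta
                    total[e] += delta
        # M-step: renormalize so that the probabilities for each English word sum to 1.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t

def best_translation(t, english_word):
    """Most probable foreign word for a given English word."""
    candidates = {f: p for (f, e), p in t.items() if e == english_word}
    return max(candidates, key=candidates.get)

t = ibm_model1(corpus)
for word in ["the", "house", "book", "a"]:
    print(word, "->", best_translation(t, word))
```

No sentence pair states outright that *haus* means *house*; the correspondence emerges because *das* is already explained by *the* in another pair, which is the kind of statistical signal that large parallel corpora amplify.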

Combined, these two factors cut bulk data processing times substantially, making ever more complex computations feasible within reasonable timeframes. By early 2018, AI had reached a number of widely publicized milestones and made its way into various sectors of the economy. It would, however, be a mistake to perceive AI as a silver bullet for all of humanity’s problems.

AI Breakthroughs

Expert Systems and Text Recognition: IBM Watson and Jeopardy!

Perhaps the best-known example of AI is the IBM Watson expert system, which combines a vast body of knowledge (i.e. data and the semantic relations between them) with the capacity to process queries posed in English. In 2011, IBM Watson won an impressive victory over two of the most successful human champions of the TV quiz show Jeopardy! [i]. This achievement, which demonstrated Watson’s ability to process and structure information, helped IBM break into the expert system market.
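Watson’s actual architecture is far more elaborate, but the idea of “data plus the semantic relations between them” can be sketched with a hypothetical knowledge base of subject–relation–object triples and a pattern-matching query. The facts, relation names (`is_a`, `made_by`, `located_in`) and helper functions below are invented for illustration.

```python
# Hypothetical knowledge base: (subject, relation, object) triples.
TRIPLES = {
    ("MC-21", "is_a", "aircraft"),
    ("MC-21", "made_by", "Irkut"),
    ("Irkut", "is_a", "manufacturer"),
    ("Irkut", "located_in", "Russia"),
}

def query(subject=None, relation=None, obj=None):
    """Return all triples matching the pattern; None acts as a wildcard."""
    return sorted(
        (s, r, o) for (s, r, o) in TRIPLES
        if (subject is None or s == subject)
        and (relation is None or r == relation)
        and (obj is None or o == obj)
    )

def follow(subject, *relations):
    """Chain relations, e.g. 'where is the maker of the MC-21 located?'."""
    current = {subject}
    for rel in relations:
        current = {o for s in current for (_, _, o) in query(s, rel)}
    return current

print(follow("MC-21", "made_by", "located_in"))  # prints {'Russia'}
```

Answering a multi-step question by chaining relations is the essential difference between such a knowledge base and a plain keyword search.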

Neural Networks: Google DeepMind’s Win at Go

The growth in computational performance contributed significantly to the development of artificial neural networks, which had been conceptualized as far back as the 1940s [16]. Contemporary technology makes it possible to train large neural networks, and the complexity of the problems that can be solved depends largely on the size of the network. These deep learning systems stand out for their layered structure, which first recognizes local details of the input data (such as differences in the colour of neighbouring pixels) and then, as the data passes through further layers, its global properties (such as lines and shapes). DeepMind engineers created and trained a neural network with such an architecture to play Go. The algorithm then unexpectedly beat one of the world’s top human players [26]. Other research teams have begun applying similar methods to video games such as StarCraft II [27].
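The local-to-global progression described above can be illustrated with the two basic operations of a convolutional network, the family of architectures this description fits. In the minimal sketch below (pure Python, with a hypothetical 5×5 image and a hand-picked kernel instead of learned weights), a convolution detects brightness differences between neighbouring pixels, and max pooling then summarizes, at a coarser scale, where in the image such edges occur.

```python
def convolve2d(image, kernel):
    """Valid 2-D cross-correlation (no padding), the core operation of a convolutional layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling: each size x size block is summarized by its maximum."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# Hypothetical 5x5 image: dark left part (0), bright right part (1), i.e. a vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked local detector: difference between horizontally neighbouring pixels.
edge_kernel = [[-1, 1]]

edges = convolve2d(image, edge_kernel)  # 5x4 map, 1 where the brightness jumps
coarse = max_pool(edges, size=2)        # 2x2 summary: the edge lies in the left half
print(edges)
print(coarse)  # prints [[1, 0], [1, 0]]
```

In a trained network the kernels are learned from data rather than chosen by hand, and many such layers are stacked so that later layers respond to increasingly global shapes.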

Practical Applications of AI

As of early 2018, machine learning, pattern recognition and autonomous planning had spilled over from research labs into the commercial market. The first users of automated planning were the militaries on either side of the Iron Curtain, which had been interested in such solutions since the 1950s [15, 31]. Economists embraced planning technology at roughly the same time. Listed below are several examples of how AI algorithms are currently used.

- IBM offers its Watson solution to a variety of economic sectors: health professionals can use it to diagnose patients and recommend treatment; lawyers can use it to classify cases under the relevant legislation; and railway personnel can use it to detect fatigue damage in tracks and rolling stock [33].

- In medicine, pattern recognition makes it possible to identify and classify internal organs when planning surgeries [4].

- Online shops use machine-learning mechanisms to recommend products to regular customers [3].

- Autonomous robotized museum guides [1] conduct guided tours and answer visitors’ questions.

- The military is introducing the first elements of autonomous decision-making technology. Short-range air-defence and anti-missile systems leave humans out of the decision-making loop due to the long reaction times of human operators, and advanced anti-ship missile systems assign targets to missiles depending on their priority.
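The product-targeting example in the list above is typically handled by recommender systems [3]. As a minimal sketch, assuming a hypothetical purchase history and one simple technique among many (item-based collaborative filtering with cosine similarity), a shop could score each product a client has not yet bought by how often it is bought by the same customers as the products the client already owns.

```python
from math import sqrt

# Hypothetical purchase history: customer -> set of products bought.
purchases = {
    "alice": {"tent", "sleeping_bag", "stove"},
    "bob": {"tent", "sleeping_bag"},
    "carol": {"tent", "stove", "lantern"},
    "dave": {"novel", "lamp"},
}

def item_similarity(a, b):
    """Cosine similarity of two products, based on the sets of customers who bought them."""
    buyers_a = {c for c, items in purchases.items() if a in items}
    buyers_b = {c for c, items in purchases.items() if b in items}
    if not buyers_a or not buyers_b:
        return 0.0
    return len(buyers_a & buyers_b) / sqrt(len(buyers_a) * len(buyers_b))

def recommend(customer, k=2):
    """Rank unseen products by total similarity to what the customer already owns."""
    owned = purchases[customer]
    catalogue = set().union(*purchases.values())
    scores = {item: sum(item_similarity(item, o) for o in owned) for item in catalogue - owned}
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))  # ties broken by name
    return [item for item, score in ranked[:k] if score > 0]

print(recommend("bob"))  # prints ['stove', 'lantern']
```

Item-based filtering is used here because it needs nothing but purchase records; production systems typically combine it with many other signals.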

The Prospects of AI Application

AI is expected to solve even more problems in the 2020s. Some of these problems are listed below, together with the projected R&D progress.

Driverless Vehicles

The greatest challenge in developing driverless vehicles is enabling them to navigate road traffic despite manoeuvring constraints and the multitude of possible situations on the road. As of 2018, the most advanced solution on the market is the Audi A8 autopilot, which can successfully navigate traffic jams on motorways [20]. In March 2017, BMW promised to roll out, by 2021, a vehicle able to deliver passengers to their destinations without human intervention [19].

Military Applications

The most promising military application of AI would be automated target recognition and tracking for robotic platforms and, consequently, the ability of such platforms to engage targets autonomously [2]. As of late 2017, the leading military powers were conducting a number of research efforts in this field, primarily for ground-based, surface and underwater weapon systems.

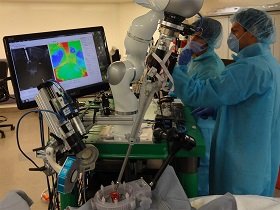

Robotic Surgery

Surgery (including microsurgery, which requires precise manipulations) is expected to become automated in the near future. The robotic technology demonstrator STAR (Smart Tissue Autonomous Robot), presented in 2016 [24], is capable of performing soft-tissue surgery. Further advances in pattern recognition could eventually allow all soft-tissue surgery to be robotized, which would make surgical interventions more affordable while reducing the workload of medical personnel.

Problems Still Unsolved

Although computer algorithms may appear omnipotent, there are still problems that they either cannot solve or solve poorly. In particular, it is difficult, for obvious reasons, to make them solve problems that are hard to formalize, such as writing a novel or picking the most beautiful photo from a selection. In fact, even problems that can be formalized with mathematical precision do not necessarily lend themselves to AI solutions with guaranteed success. This may be due to the complexity of mathematical modelling of lower-level tasks (say, modelling a robot’s movements when teaching it to play football); the complexity of the problem itself (for example, no algorithms are known that draw logical conclusions and prove mathematical statements significantly better than exhaustively checking all possible chains of reasoning [30]); the vast number of parameters and the imprecision of the observable world (again, as in football); and the insufficient capacity of computing systems compared with the human brain. Indeed, the interactions of the brain’s 1 × 10¹¹ neurons cannot currently be simulated at full scale: as of early 2018, the best result achieved was the 2013 simulation of 1.7 × 10⁹ neurons, which ran 2,400 times slower than real time [34]. Moreover, even simulating the required number of brain cells would not necessarily reproduce actual brain activity in a computer model.
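The brute-force approach mentioned above can be sketched for propositional logic: to check whether a set of premises entails a conclusion, enumerate every truth assignment and look for a counterexample. With n variables there are 2ⁿ assignments, so each additional variable doubles the work; for propositional logic, no known algorithm avoids exponential running time in the worst case. The function and variable names below are illustrative.

```python
from itertools import product

def entails(premises, conclusion, variables):
    """Check entailment by exhaustive enumeration of all 2**n truth assignments."""
    for values in product([False, True], repeat=len(variables)):
        world = dict(zip(variables, values))
        if all(p(world) for p in premises) and not conclusion(world):
            return False  # counterexample: premises true, conclusion false
    return True

# Modus ponens: from "p" and "p implies q", conclude "q".
premises = [lambda w: w["p"], lambda w: (not w["p"]) or w["q"]]
print(entails(premises, lambda w: w["q"], ["p", "q"]))            # prints True
# Affirming the consequent is invalid: "q" does not entail "p".
print(entails([lambda w: w["q"]], lambda w: w["p"], ["p", "q"]))  # prints False
```

Two variables mean four cases; a formula with 60 variables would already require about 10¹⁸ checks, which illustrates why exhaustive search quickly becomes impractical.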

The drawbacks of machine learning merit special mention. As a rule, machine learning requires pre-classified training data that an algorithm analyses for patterns. If training data is scarce, the algorithm may encounter input that does not belong to any of the classes it has learnt. Recognizing a new phenomenon in the input and creating a new class of objects is difficult at best [23], and becomes even more complex if the algorithm continues to learn while the classifier is in operation and the set of recognized classes may change over time. Another significant shortcoming of machine learning is the extreme sensitivity of algorithms to small changes in their input: for example, facial recognition software can be fooled by a specially designed pair of glasses [25]. In some instances, a photograph may be misclassified after changes imperceptible to the human eye: a seemingly insignificant manipulation of a photo of a panda, for instance, may lead an algorithm to mistake the animal for a gibbon [13].
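Goodfellow et al. [13] attribute such adversarial examples largely to the linear behaviour of models in high-dimensional input spaces and propose the fast gradient sign method: shift every input feature by a small ε in the direction of the sign of the gradient. The sketch below applies that idea to a hypothetical linear classifier with 1,000 features rather than to a real image model. Each feature changes by only 0.02, yet the score moves by 0.02 times the sum of the absolute weights, which is enough to flip the predicted class.

```python
def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def score(w, b, x):
    """Linear score; in this hypothetical setup a positive score means 'panda'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fast_gradient_sign(w, x, eps):
    """For a linear score the gradient with respect to x is w, so moving each feature
    by -eps * sign(w_i) lowers the score as much as possible within a max-norm budget eps."""
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]

# Hypothetical model and input: 1,000 features, weights of magnitude 0.1.
n = 1000
w = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
b = 0.0
x = [0.5 + 0.01 * sign(wi) for wi in w]  # original input, score about +1.0

x_adv = fast_gradient_sign(w, x, eps=0.02)  # every feature moves by just 0.02
print(round(score(w, b, x), 3), round(score(w, b, x_adv), 3))  # prints 1.0 -1.0
```

The more features a model has, the smaller the per-feature change needed for the same shift in score, which is why high-resolution images are especially vulnerable.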

Computers may be successful at solving such “complex” problems as symbolic and numerical computation, and even at beating chess grandmasters, but many fairly simple problems remain unsolved. These include classifying “unknown” images without prior training on pre-classified samples (such as recognizing images of apples with an algorithm that only knows cherries and pears), motor skills and rational reasoning. This phenomenon is known as Moravec’s paradox [17]. To a great extent, it reflects human perception: the abilities of any adult human being, which are based on millions of years of evolution, are taken for granted, whereas mathematical problems such as finding the shortest route on a map appear unnatural, and their solutions non-trivial.
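The apples-versus-cherries-and-pears example can be made concrete with a minimal sketch, assuming hypothetical two-number fruit descriptions (diameter in centimetres and height-to-width ratio) and a nearest-centroid classifier. A closed-set classifier must assign every input to a known class, so the apple becomes a pear; adding a distance threshold lets it answer “unknown” instead. Choosing that threshold in a principled way is precisely the hard part addressed by open-set recognition research [23]; the value 0.5 here is arbitrary.

```python
from math import dist

# Hypothetical training data: (diameter in cm, height/width ratio) for each known fruit.
TRAINING = {
    "cherry": [(2.0, 1.0), (2.2, 1.05), (1.8, 0.95)],
    "pear": [(6.5, 1.6), (7.0, 1.5), (6.0, 1.7)],
}

def centroid(points):
    """Mean of a list of feature tuples."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))

CENTROIDS = {label: centroid(pts) for label, pts in TRAINING.items()}

def classify(features, reject_distance=None):
    """Nearest-centroid classifier; optionally returns 'unknown' when the input is
    farther than reject_distance from every class seen during training."""
    label, d = min(((lab, dist(features, c)) for lab, c in CENTROIDS.items()),
                   key=lambda pair: pair[1])
    if reject_distance is not None and d > reject_distance:
        return "unknown"
    return label

apple = (7.5, 0.95)  # hypothetical measurements of a class never seen in training
print(classify(apple))                       # prints pear
print(classify(apple, reject_distance=0.5))  # prints unknown
```

A threshold that is too tight rejects legitimate pears, while one that is too loose lets apples through, and nothing in the training data of cherries and pears says where the boundary should be.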

AI Myths

More than 50 years of research into AI have given rise to a multitude of myths and misconceptions about both the technology’s capabilities and its shortcomings. For example, one story perpetuated in the field of machine translation holds that a low-quality translation tool of the 1960s turned the phrase “The spirit is willing but the flesh is weak” into “The vodka is good but the meat has gone rotten” when translating it into Russian and then back into English. In fact, this example was originally used to illustrate a mistranslation by a human armed only with a dictionary, a basic knowledge of grammar and a runaway imagination; there is no hard evidence that machine translation software has ever performed in this way [14].

Let us enumerate several myths which distort the public perception of AI.

“Any sufficiently advanced technology is indistinguishable from magic,” Arthur C. Clarke once said. Fair enough, but contemporary AI research is based on mathematics, robotics, statistics and information technology. As discussed earlier in this article, any software or hardware–software system incorporating AI capabilities is primarily intended to solve one or more mathematical problems, often on the basis of pre-collected and pre-classified data.

AI is a Computer Brain

The perception of AI as an anthropomorphic mechanism, one similar in many respects to the human brain, is firmly ingrained in contemporary culture. In reality, however, even neural networks are a mathematical abstraction that has very little in common with the biological prototype. Other models, like Markov chains, have no biological equivalents whatsoever. The existing algorithms are not aimed at modelling human consciousness; rather, they specialize in solving specific formalized problems.
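To illustrate how abstract these models are, here is a minimal sketch of a McCulloch–Pitts-style threshold unit [16] (a weighted sum compared with a threshold) and of one step of a Markov chain (a vector of state probabilities multiplied by a transition matrix). Neither involves anything biological; the weights, thresholds and the two-state transition matrix are hypothetical values chosen for illustration.

```python
def threshold_neuron(inputs, weights, threshold):
    """Artificial 'neuron': outputs 1 if the weighted input sum reaches the threshold, else 0."""
    return int(sum(x * w for x, w in zip(inputs, weights)) >= threshold)

def markov_step(distribution, transitions):
    """One Markov chain step: new_p[j] = sum over i of p[i] * P[i][j]."""
    n = len(distribution)
    return [sum(distribution[i] * transitions[i][j] for i in range(n)) for j in range(n)]

# With unit weights and threshold 2, a two-input unit computes logical AND.
and_table = [threshold_neuron((a, b), (1, 1), 2) for a in (0, 1) for b in (0, 1)]
print(and_table)  # prints [0, 0, 0, 1]

# Hypothetical two-state chain: state 0 = "sunny", state 1 = "rainy".
P = [[0.9, 0.1],
     [0.5, 0.5]]
print(markov_step([1.0, 0.0], P))  # prints [0.9, 0.1]
```

Lowering the threshold to 1 turns the same unit into logical OR, which shows that its “behaviour” is fully determined by a few numbers.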

There are a Limited Number of Applications for AI

According to a stereotype fed by popular culture, AI is rarely involved in anything other than planning in the interests of major corporations or governments. In fact, the capabilities described above allow AI technologies to be harnessed for the benefit of broad sections of the population: the algorithms are used in everyday life, and the range of problems addressed by machine learning includes fairly routine tasks such as assessing the need for repairs across a taxi fleet or planning the optimal use of teller windows in bank branches.

AI-Related Dangers

As a rule, apocalyptic science fiction paints a gloomy picture of AI deciding, at some point, to destroy humankind, with scientists either unable to prevent this scenario or blissfully unaware of the danger. In real life, the scientific community has long been discussing the hazards associated with superintelligent software [6]. The key risk identified is the incorrect tasking of a strong AI that commands considerable computational power and material resources while disregarding the interests of people. Nick Bostrom’s book [6] also offers possible solutions to this problem.

The Reality of Strong AI

Excessively high or low expectations of scientific and technical progress result in conflicting forecasts: strong AI is said to be coming any day now (next year at the latest), or never. In reality, the creation of strong AI is difficult to predict, because it depends on whether several engineering and mathematical problems of unknown complexity are solved. As illustrated by Fermat’s Last Theorem, which was proved only some 350 years after it was first proposed, no one can give an exact date for the creation of strong AI.

International Trends

The expanding range of AI applications has heightened the interest of military and security circles in the capabilities of autonomous systems. Research and development related to the possible applications mentioned above has provoked heated international debates on the advisability of restricting, or even banning, robotic systems altogether. The Campaign to Stop Killer Robots has been particularly prominent in this respect [32]: its supporters demand a full ban on the development of autonomous combat systems on ethical grounds. In this light, it is worth mentioning not just combat robots but also classification systems that inform decisions to use force based exclusively on metadata, without any regard even for the content of suspects’ messages [21].

This heightened public attention to autonomous combat systems has led to talks within the framework of the UN Convention on Prohibitions or Restrictions on the Use of Certain Conventional Weapons Which May Be Deemed to Be Excessively Injurious or to Have Indiscriminate Effects, whose protocols cover, among other things, anti-personnel mines and blinding lasers. However, as of early 2018, the diplomatic process had not resulted in any mutual obligations [12]. This is largely due to the difficulty of defining an autonomous combat system, the existence of systems (primarily air-defence and anti-missile systems) that would meet a possible definition, and the unwillingness of governments to give up a promising technology [2]. Nevertheless, it would be an oversimplification to reduce the arguments for establishing control over autonomous weapons to morality and ethics alone. Strategic stability is also at risk [5]: first, the use of autonomous systems could lead to uncontrolled escalation of armed conflicts with unpredictable consequences; second, exports of such systems would be difficult to control. Indeed, the handover of a cruise missile is hard to conceal, and its range is limited by its physical properties, whereas software code is practically impossible to control. AI algorithms are not limited to military applications, and restrictions on research into software with such a broad range of possible uses, possibly including a ban on scientific publications, would inevitably be resisted by the scientific community, which largely depends on international cooperation and major conferences.

Bibliography

1. Diallo A.D., Gobee S., Durairajah V. Autonomous Tour Guide Robot using Embedded System Control // Procedia Computer Science. 2015. (76), pp. 126–133.

2. Boulanin V., Verbruggen M. Mapping the Development of Autonomy in Weapon Systems / V. Boulanin, M. Verbruggen, Solna: SIPRI, 2017.

3. Aggarwal C. C. Recommender Systems / C.C. Aggarwal, Heidelberg: Springer International Publishing, 2016.

4. Alpers J. [et al]. CT-Based Navigation Guidance for Liver Tumor Ablation // Eurographics Workshop on Visual Computing for Biology and Medicine. 2017.

5. Altmann J., Sauer F. Autonomous Weapon Systems and Strategic Stability // Survival. 2017. No. 5 (59), pp. 117–142.

6. Bostrom N. Superintelligence: Paths, Dangers, Strategies / N. Bostrom, first edition, Oxford, UK: Oxford University Press, 2014.

7. Intel Corporation. Intel® Core i5-2500 Desktop Processor Series // Intel Corporation [website]. 2011. URL: https://www.intel.com/content/dam/support/us/en/documents/processors/corei5/sb/core_i5-2500_d.pdf (accessed on 11.03.2018).

8. DeNero J., Uszkoreit J. Inducing Sentence Structure from Parallel Corpora for Reordering // Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing (EMNLP). 2011.

9. Dongarra J.J. Performance of Various Computers Using Standard Linear Equations Software. // 2014. URL: http://www.netlib.org/benchmark/performance.pdf (accessed on 11.03.2018).

10. Floreano D., Mattiussi C. Bio-Inspired Artificial Intelligence: Theories, Methods, and Technologies / D. Floreano, C. Mattiussi, The MIT Press, 2008.

11. Geeks3D. AMD Radeon and NVIDIA GeForce FP32/FP64 GFLOPS Table // Geeks3D [website]. 2014. URL: http://www.geeks3d.com/20140305/amd-radeon-and-nvidia-geforce-fp32-fp64-gflops-table-computing/ (accessed on 11.03.2018).

12. The United Nations Office at Geneva. CCW Meeting of High Contracting Parties // 2017. URL: https://www.unog.ch/80256EE600585943/httpPages)/A0A0A3470E40345CC12580CD003D7927?OpenDocument (accessed on 11.03.2018).

13. Goodfellow I., Shlens J., Szegedy C. Explaining and Harnessing Adversarial Examples // International Conference on Learning Representations. 2015.

14. Hutchins J. “The Whisky Was Invisible,” or Persistent myths of MT // MT News International. 1995. (11), pp. 17–18.

15. Keneally S. K., Robbins M. J., Lunday B. J. A Markov Decision Process Model for the Optimal Dispatch of Military Medical Evacuation Assets // Health Care Management Science. 2016. No. 2 (19), pp. 111–129.

16. McCulloch W. S., Pitts W. A Logical Calculus of the Ideas Immanent in Nervous Activity // The Bulletin of Mathematical Biophysics. 1943. No. 4 (5), pp. 115–133.

17. Moravec H. Mind Children: The Future of Robot and Human Intelligence / H. Moravec, Cambridge, MA: Harvard University Press, 1990.

18. Raynor W. Jr. International Dictionary of Artificial Intelligence / W. Raynor Jr., 2nd edition, London, United Kingdom: Global Professional Publishing, 2008.

19. Reuters. BMW Says Self-Driving Car to be Level 5 Capable by 2021 // Reuters [website]. 2017. URL: https://www.reuters.com/article/us-bmw-autonomous-self-driving/bmw-says-self-driving-car-to-be-level-5-capable-by-2021-idUSKBN16N1Y2 (accessed on 11.03.2018).

20. Ross P. E. The Audi A8: The World’s First Production Car to Achieve Level 3 Autonomy // IEEE Spectrum [website]. 2017. URL: https://spectrum.ieee.org/cars-that-think/transportation/self-driving/the-audi-a8-the-worlds-first-production-car-to-achieve-level-3-autonomy (accessed on 11.03.2018).

21. RT in Russian. Former CIA, NSA Director: ‘We Kill People Based on Metadata’ // RT in Russian [website]. 2014. URL: https://russian.rt.com/article/31734 (accessed on 11.03.2018).

22. Saarikoski J. [et al]. On the Influence of Training Data Quality on Text Document Classification Using Machine Learning Methods // Int. J. Knowl. Eng. Data Min. 2015. No. 2 (3), pp. 143–169.

23. Scheirer W. J., Jain L. P., Boult T. E. Probability Models for Open Set Recognition // IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI). 2014. No 11 (36).

24. Shademan A. [et al]. Supervised Autonomous Robotic Soft Tissue Surgery // Science Translational Medicine. 2016. No. 337 (8), p. 337ra64.

25. Sharif M. [et al]. Adversarial Generative Nets: Neural Network Attacks on State-of-the-Art Face Recognition // CoRR. 2017. (abs/1801.00349).

26. Silver D. [et al]. Mastering the Game of Go with Deep Neural Networks and Tree Search // Nature. 2016. (529), pp. 484–503.

27. Vinyals O. [et al]. StarCraft II: A New Challenge for Reinforcement Learning // CoRR. 2017. (abs/1708.04782).

28. Yudkowsky E. Levels of Organization in General Intelligence (eds. B. Goertzel, C. Pennachin), Berlin, Heidelberg: Springer Berlin Heidelberg, 2007, pp. 389–501.

29. Russell S., Norvig P. Artificial Intelligence: A Modern Approach / S. Russell, P. Norvig, Moscow: Williams, 2007.

30. Hopcroft J., Motwani R., Ullman J. Introduction to Automata Theory, Languages and Computation / J. Hopcroft, R. Motwani, J. Ullman. 2nd edition, Moscow: Williams, 2008.

31. Tsygichko V. Models in the System of Strategic Decisions in the USSR / V. Tsygichko, Moscow: Imperium Press, 2005.

32. Campaign to Stop Killer Robots [website]. URL: https://www.stopkillerrobots.org/ (accessed on 11.03.2018).

33. AI Stories // IBM. 2018. URL: https://www.ibm.com/watson/ai-stories/index.html (accessed on 11.03.2018).

34. RIKEN, Forschungszentrum Jülich. Largest Neuronal Network Simulation Achieved Using K Computer // RIKEN [website]. 2013. URL: http://www.riken.jp/en/pr/press/2013/20130802_1/ (accessed on 11.03.2018)

i. Also known as the Svoya Igra [Own Game] franchise in Russia – author’s note.
